FCC Cumulative Leakage Index Testing

CLI testing, mandated in 47 CFR 76.611, was established to ensure that cable telecommunications operators do not inadvertently emit radio energy from their closed environments into sensitive segments of the on-air radio spectrum. This is necessary because of the harm these signals can cause to aeronautical, public safety, and other critical radio traffic.

As radio spectrum becomes increasingly crowded, the FCC will continue to lower emission limits and increase enforcement. As wireless operators, first responders, military, and scientific users continue to consume more spectrum, we can expect that all users will become more sensitive to unintentional emissions. It is in the cable industry's best interests to ensure that its monitoring programs keep pace with advancing radio technologies and spectrum users.

Spectraq's Innovative LDR Technology

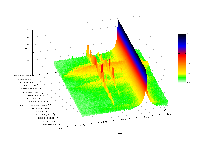

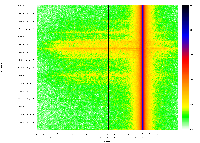

Spectraq's approach to CLI testing is truly revolutionary. Rather than taking a simplistic fixed-frequency power measurement once per second, we record spectrum in widths of up to 110 MHz at a time, at sample rates of megasamples per second. We record all signals present plus the noise floor. Why is this important? Because high-noise environments will artificially inflate leakage powers, and large signal sources can overload simple receivers in ways that are difficult to detect. Spectraq's LDR system easily identifies and eliminates these error terms.

Spectraq's LDR systems record the full information content of the spectrum. This means that we can tell whether a signal is modulated with an FM tone, is pure CW, or is an NTSC-modulated video carrier, to name just a few. We can also measure full-bandwidth digital signals such as DOCSIS 1, 2, and 3. We can detect these signals even when they are mixed with on-air signals, which is a typical occurrence because the radio spectrum is almost completely filled with licensed transmissions.
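To illustrate why recording the noise floor matters, the following minimal sketch shows how noise power adds to a leak's power in a simple detector and how a known noise floor can be subtracted back out. It is generic power arithmetic, not a description of how the LDR system actually processes data. The function names and the example levels (-60 dBm leak, -63 dBm noise) are hypothetical.

```python
import math


def dbm_to_mw(dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


def mw_to_dbm(mw: float) -> float:
    """Convert a power in milliwatts to dBm."""
    return 10 * math.log10(mw)


def measured_dbm(leak_dbm: float, noise_dbm: float) -> float:
    """Level a simple power detector reports: leak and noise add in linear units."""
    return mw_to_dbm(dbm_to_mw(leak_dbm) + dbm_to_mw(noise_dbm))


def noise_corrected_dbm(total_dbm: float, noise_dbm: float) -> float:
    """Recover the leak level by subtracting a separately measured noise floor."""
    leak_mw = dbm_to_mw(total_dbm) - dbm_to_mw(noise_dbm)
    if leak_mw <= 0:
        raise ValueError("measurement is at or below the noise floor")
    return mw_to_dbm(leak_mw)


# Hypothetical example: a -60 dBm leak seen over a -63 dBm noise floor.
total = measured_dbm(-60.0, -63.0)
print(f"detector reads {total:.2f} dBm")                       # inflated reading
print(f"corrected to {noise_corrected_dbm(total, -63.0):.2f} dBm")
```

In this example the uncorrected reading is inflated by roughly 1.8 dB. A receiver that reports only a single power value has no way to separate the two contributions.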

Legacy Systems Exposed

Historically, CLI measurements have been made using traditional narrowband analog audio radio receivers. These receivers are usually polled once per second. The output is a basic power or voltage measurement from a detector at a single frequency point. These data points are usually combined with once-per-second latitude and longitude data from a GPS receiver.

These 1980s-era systems can be easily fooled by large pulsed signals, high-noise environments, tuning drift, and other similar errors. They are difficult to calibrate correctly, and they cannot include any active reference calibration signals to ensure measurement accuracy throughout the entire test period.

The extremely low sample rate used by these legacy systems requires that aircraft fly slowly. The faster the plane flies, the greater the distance between these once-per-second samples. The farther apart these points are, the less accurately leaks can be correlated to a fixed ground position. For example, if the plane flies at 140 mph ground speed, consecutive samples are more than 200 ft apart. In contrast, at the same 140 mph speed, Spectraq's LDR system spaces data points about 1/3 inch apart.
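The spacing figures above follow from simple arithmetic, sketched below. The function names are hypothetical. The position rate computed at the end is only what the quoted 1/3 inch spacing would imply at 140 mph; it is not a figure stated for the LDR system.

```python
FT_PER_MILE = 5280
SECONDS_PER_HOUR = 3600
INCHES_PER_FT = 12


def sample_spacing_ft(ground_speed_mph: float, samples_per_second: float) -> float:
    """Distance the aircraft travels over the ground between consecutive samples."""
    speed_ft_per_s = ground_speed_mph * FT_PER_MILE / SECONDS_PER_HOUR
    return speed_ft_per_s / samples_per_second


def rate_for_spacing(ground_speed_mph: float, spacing_in: float) -> float:
    """Samples per second needed to achieve a given spacing in inches."""
    speed_in_per_s = ground_speed_mph * FT_PER_MILE / SECONDS_PER_HOUR * INCHES_PER_FT
    return speed_in_per_s / spacing_in


# Legacy receiver polled once per second at 140 mph ground speed.
print(f"{sample_spacing_ft(140, 1):.1f} ft between samples")  # 205.3 ft

# Rate implied by a 1/3 inch spacing at the same speed (derived, not quoted).
print(f"{rate_for_spacing(140, 1 / 3):.0f} samples per second")
```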

Another issue with most legacy systems is that they do not record their altitude. While pilots are instructed to fly at a nominal 1,500 ft AGL, they often cannot because of other air traffic, terrain, and obstacles such as towers. Varying between 1,200 ft and 2,200 ft can introduce several dB of error. This is enough uncertainty to fail an audit for lack of sensitivity when flying too high, or to overreport problems when flying too low.
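A rough estimate of the size of this altitude error can be sketched as follows. It assumes free-space (inverse-square) propagation and an aircraft directly above the leak, so the path length equals the altitude. Both are simplifying assumptions rather than statements from the regulation, and the function name is hypothetical.

```python
import math

NOMINAL_AGL_FT = 1500.0  # nominal flyover altitude cited in the text


def altitude_error_db(actual_agl_ft: float,
                      nominal_agl_ft: float = NOMINAL_AGL_FT) -> float:
    """Change in received level relative to the nominal altitude.

    Positive values mean the leak reads stronger than it would at the
    nominal altitude (flying low); negative values mean it reads weaker
    (flying high). Assumes free-space path loss, 20*log10 of the distance ratio.
    """
    return 20 * math.log10(nominal_agl_ft / actual_agl_ft)


for agl in (1200.0, 1500.0, 2200.0):
    print(f"{agl:6.0f} ft AGL: {altitude_error_db(agl):+.1f} dB")
# 1200 ft reads about +1.9 dB high, 2200 ft about -3.3 dB low.
```

Under these assumptions the two extremes differ by a little over 5 dB, consistent with the "several dB" noted above. A system that records altitude with each sample could apply this kind of correction point by point.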